When you think of MWC Barcelona, chances are you’re thinking about the newest smartphones and other mobile gadgets, but that’s only half the story. Actually, it’s probably far less than half the story because the majority of the business that’s done at MWC is enterprise telco business. Not too long ago, that business was all about selling expensive proprietary hardware. Today, it’s about moving all of that into software — and a lot of that software is open source.

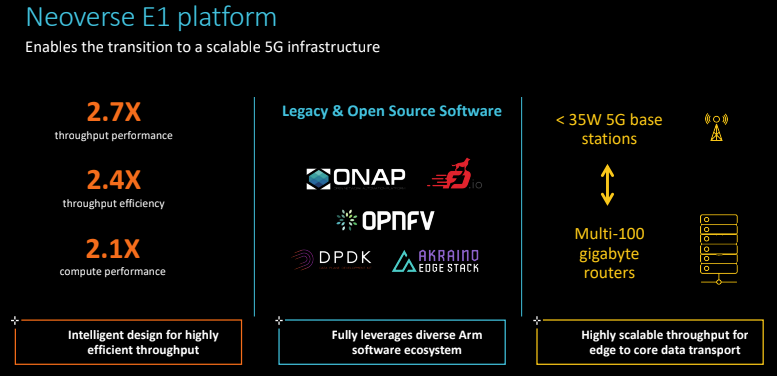

It’s maybe no surprise then that this year, the Linux Foundation (LF) has its own booth at MWC. It’s not massive, but it’s big enough to have its own meeting space. The booth is shared by three LF projects: the Cloud Native Computing Foundation (CNCF), Hyperledger and Linux Foundation Networking, the home of many of the foundational projects like ONAP and the Open Platform for NFV (OPNFV) that power many a modern network. And with the advent of 5G, there’s a lot of new market share to grab here.

To discuss the CNCF’s role at the event, I sat down with Dan Kohn, the executive director of the CNCF.

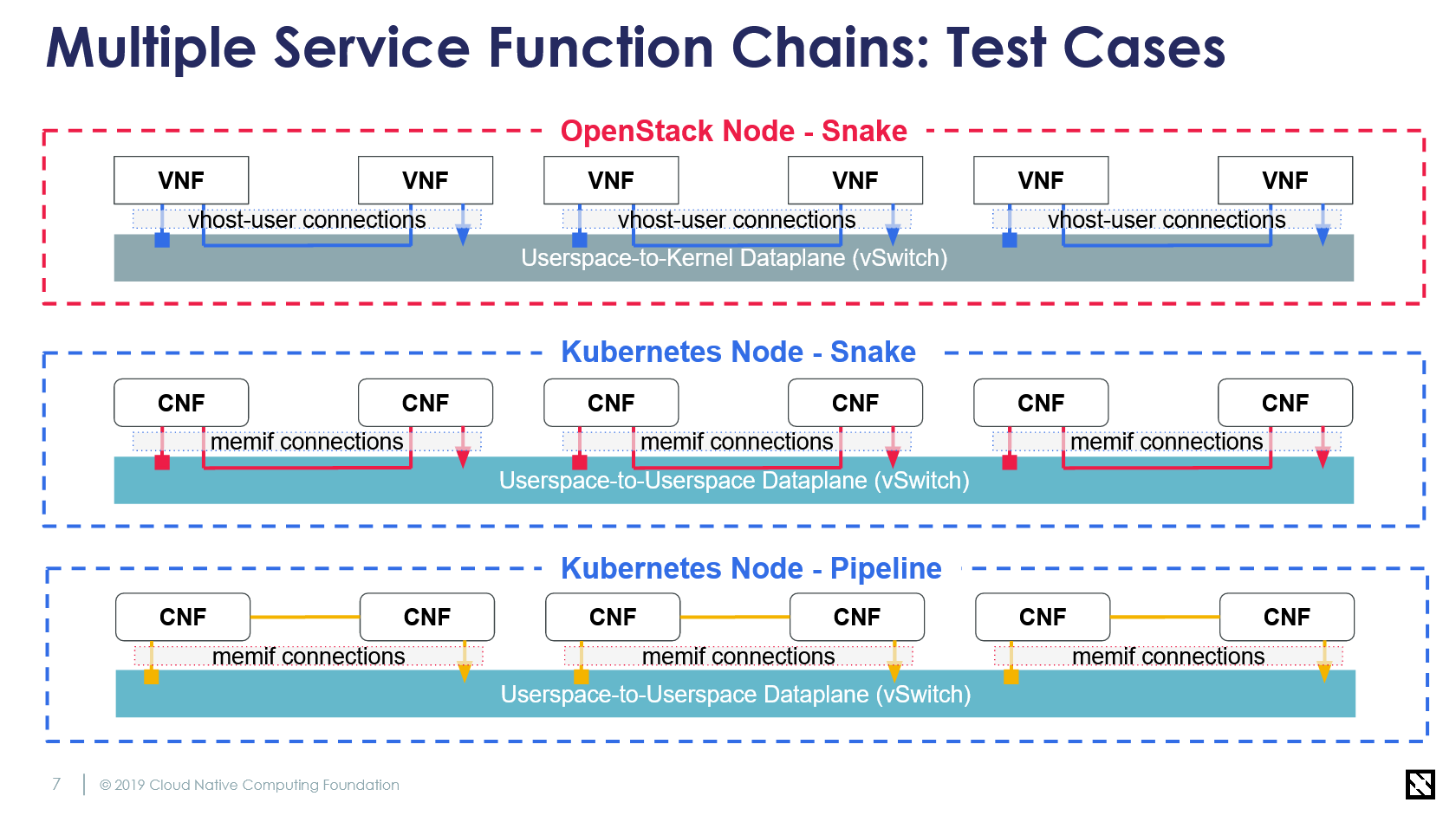

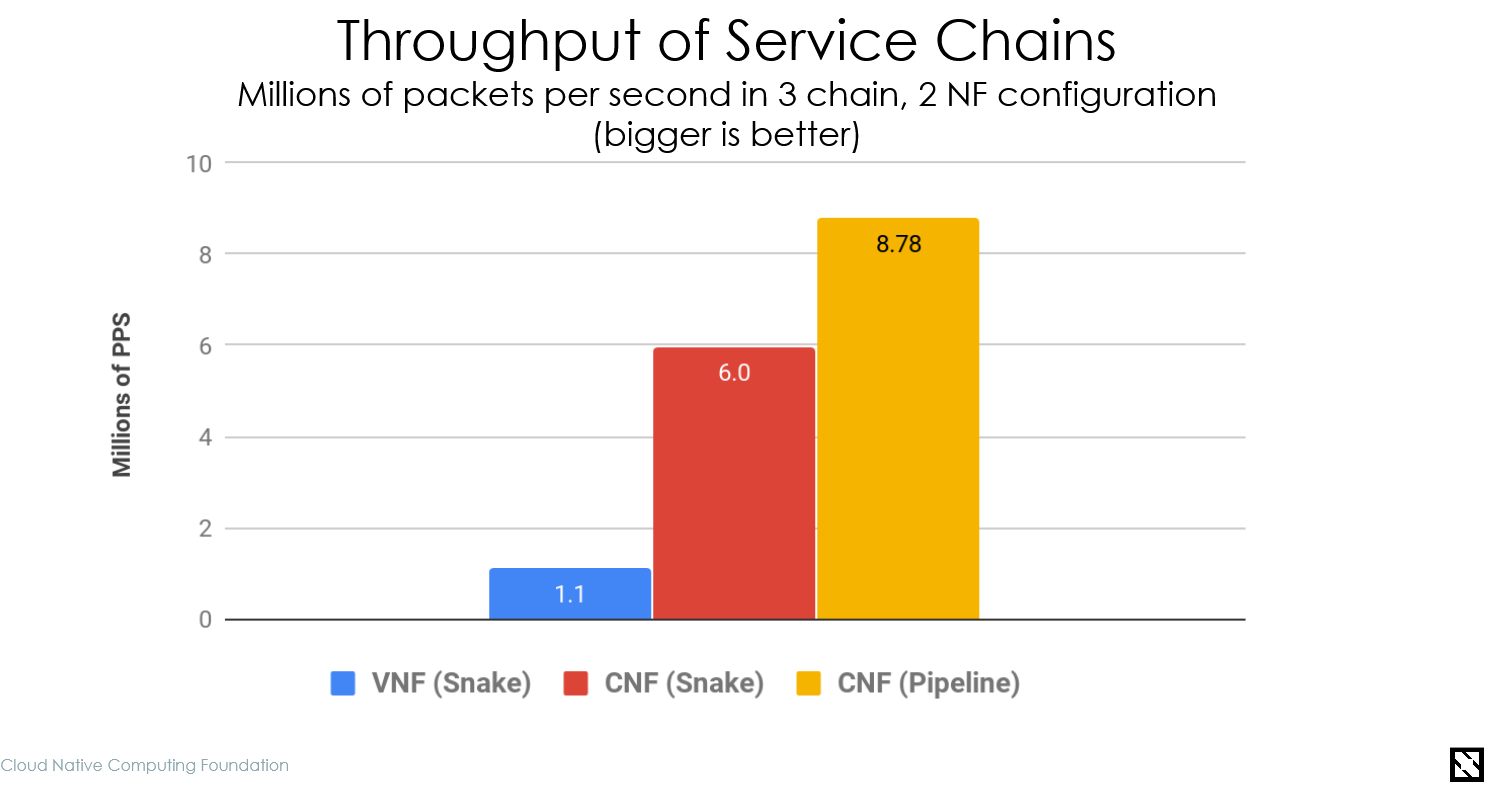

At MWC, the CNCF launched its testbed for comparing the performance of virtual network functions on OpenStack and what the CNCF calls cloud-native network functions, using Kubernetes (with the help of bare-metal host Packet). The project’s results — at least so far — show that the cloud-native container-based stack can handle far more network functions per second than the competing OpenStack code.

“The message that we are sending is that Kubernetes as a universal platform that runs on top of bare metal or any cloud, most of your virtual network functions can be ported over to cloud-native network functions,” Kohn said. “All of your operating support system, all of your business support system software can also run on Kubernetes on the same cluster.”

OpenStack, in case you are not familiar with it, is another massive open-source project that helps enterprises manage their own data center software infrastructure. One of OpenStack’s biggest markets has long been the telco industry. There has always been a bit of friction between the two foundations, especially now that the OpenStack Foundation has opened up its organizations to projects that aren’t directly related to the core OpenStack projects.

I asked Kohn if he is explicitly positioning the CNCF/Kubernetes stack as an OpenStack competitor. “Yes, our view is that people should be running Kubernetes on bare metal and that there’s no need for a middle layer,” he said. That’s something the CNCF has never stated quite as explicitly before, though it was always playing in the background. He also acknowledged that some of this friction stems from the fact that the CNCF and the OpenStack Foundation now compete for projects.

The OpenStack Foundation, unsurprisingly, doesn’t agree. “Pitting Kubernetes against OpenStack is extremely counterproductive and ignores the fact that OpenStack is already powering 5G networks, in many cases in combination with Kubernetes,” OpenStack COO Mark Collier told me. “It also reflects a lack of understanding about what OpenStack actually does, by suggesting that it’s simply a virtual machine orchestrator. That description is several years out of date. Moving away from VMs, which makes sense for many workloads, does not mean moving away from OpenStack, which manages bare metal, networking and authentication in these environments through the Ironic, Neutron and Keystone services.”

Similarly, ex-OpenStack Foundation board member (and Mirantis co-founder) Boris Renski told me that “just because containers can replace VMs, this doesn’t mean that Kubernetes replaces OpenStack. Kubernetes’ fundamental design assumes that something else is there that abstracts away low-level infrastructure, and is meant to be an application-aware container scheduler. OpenStack, on the other hand, is specifically designed to abstract away low-level infrastructure constructs like bare metal, storage, etc.”

This overall theme continued with Kohn and the CNCF taking a swipe at Kata Containers, the first project the OpenStack Foundation took on after it opened itself up to other projects. Kata Containers promises to offer a combination of the flexibility of containers with the additional security of traditional virtual machines.

“We’ve got this FUD out there around Kata and saying: telcos will need to use Kata, a) because of the noisy neighbor problem and b) because of the security,” said Kohn. “First of all, that’s FUD and second, micro-VMs are a really interesting space.”

He believes it’s an interesting space for situations where you are running third-party code (think AWS Lambda running Firecracker) — but telcos don’t typically run that kind of code. He also argues that Kubernetes handles noisy neighbors just fine because you can constrain how many resources each container gets.

It seems both organizations have a fair argument here. On the one hand, Kubernetes may be able to handle some use cases better and provide higher throughput than OpenStack. On the other hand, OpenStack handles plenty of other use cases, too, and this is a very specific use case. What’s clear, though, is that there’s quite a bit of friction here, which is a shame.

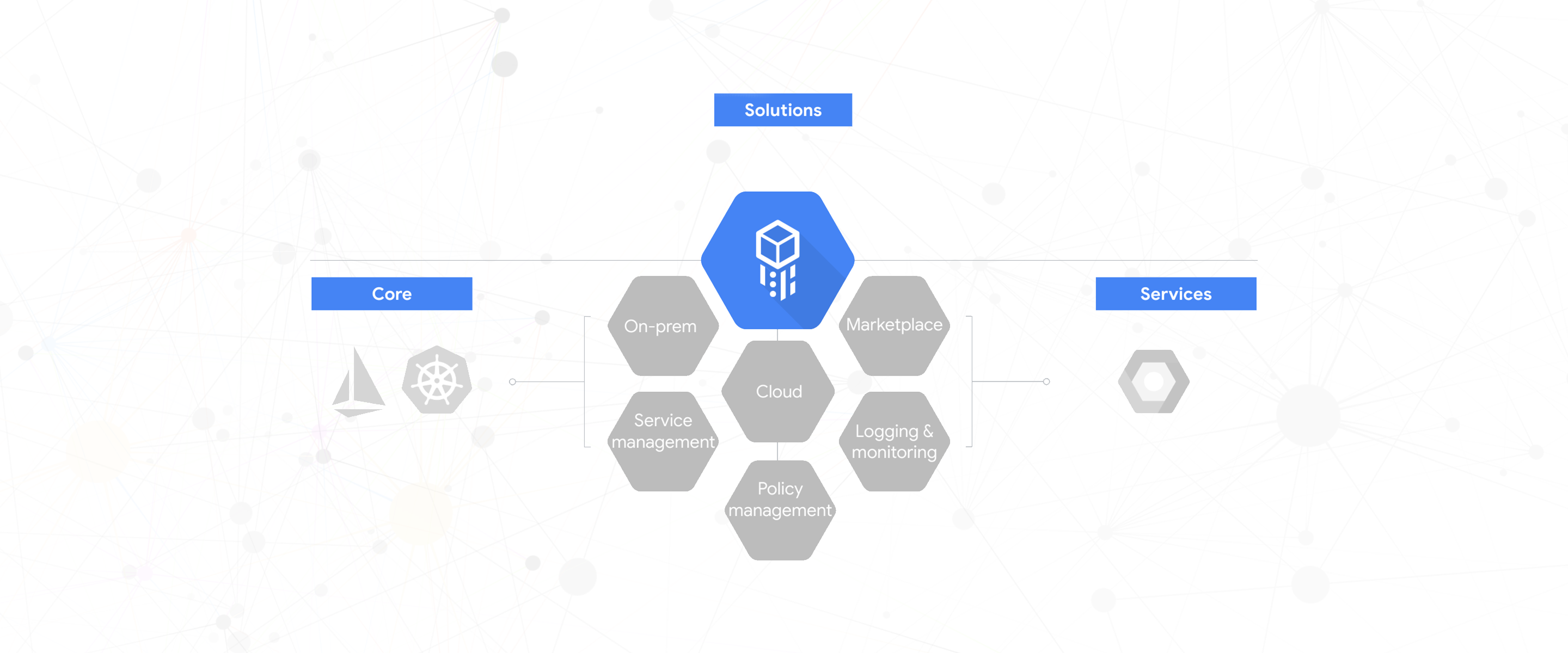

As Google Cloud engineering director Chen Goldberg told me, the idea here is to help enterprises innovate and modernize. “Clearly, everybody is very excited about cloud computing, on-demand compute and managed services, but customers have recognized that the move is not that easy,” she said and noted that the vast majority of enterprises are adopting a hybrid approach. And while containers are obviously still a very new technology, she feels good about this bet on the technology because most enterprises are already adopting containers and Kubernetes, and they are doing so at exactly the same time as they are adopting cloud and especially hybrid clouds.
