I’m not allowed to tell you exactly how Anchorage keeps rich institutions from being robbed of their cryptocurrency, but the off-the-record demo was damn impressive. Judging by the $17 million Series A this security startup raised last year led by Andreessen Horowitz and joined by Khosla Ventures, Max Levchin, Elad Gil, Mark McCombe of BlackRock, and AngelList’s Naval Ravikant, I’m not the only one who thinks so. In fact, crypto funds like Andreessen’s a16zcrypto, Paradigm, and Electric Capital are already using it.

They’re trusting in the guys who engineered Square’s first encrypted card reader and Docker’s security protocols. “It’s less about us choosing this space and more about this space choosing us. If you look at our backgrounds and you look at the problem, it’s like the universe handed us on a silver platter the venn diagram of our skillset,” co-founder Diogo Monica tells me.

Today, Anchorage is coming out of stealth and launching its cryptocurrency custody service to the public. Anchorage holds and safeguards crypto assets for institutions like hedge funds and venture firms, and only allows transactions verified by an array of biometrics, behavioral analysis, and human reviewers. And since it doesn’t use “buried in the backyard” cold storage, asset holders can actually earn rewards and advantages for participating in coin-holder votes without fear of getting their Bitcoin, Ethereum, or other coins stolen.

The result is a crypto custody service that could finally lure big-time commercial banks, endowments, pensions, mutual funds, and hedgies into the blockchain world. Whether they seek short-term gains off of crypto volatility or want to HODL long-term while participating in coin governance, Anchorage promises to protect them.

Evolving Past “Pirate Security”

Anchorage’s story starts eight years ago when Monica and his co-founder Nathan McCauley met after joining Square the same week. Monica had been getting a PhD in distributed systems while McCauley designed anti-reverse engineering tech to keep US military data from being extracted from abandoned tanks or jets. After four years of building systems that would eventually move over $80 billion per year in credit card transactions, they packaged themselves as a “pre-product acquihire” Monica tells me, and they were snapped up by Docker.

As their reputation grew from work and conference keynotes, cryptocurrency funds started reaching out for help with custody of their private keys. One had lost a passphrase and the $1 million in currency it was protecting in a display of jaw-dropping ignorance. The pair realized there were no true standards in crypto custody, so they got to work on Anchorage.

“You look at the status quo and it was and still is cold storage. It’s the same technology used by pirates in the 1700s” Monica explains. “You bury your crypto in a treasure chest and then you make a treasure map of where those gold coins are” except with USB keys, security deposit boxes, and checklists. “We started calling it Pirate Custody.” Anchorage set out to develop something better — a replacement for usernames and passwords or even phone numbers and two-factor authentication that could be misplaced or hijacked.

This led them to Andreessen Horowitz partner and a16zcrypto leader Chris Dixon, who’s now on their board. “We’ve been buying crypto assets running back to Bitcoin for years now here at a16zcrypto and it’s hard to do it in a way that’s secure, regulatory compliant, and lets you access it. We felt this pain point directly.”

Andreessen Horowitz partner and Anchorage board member Chris Dixon

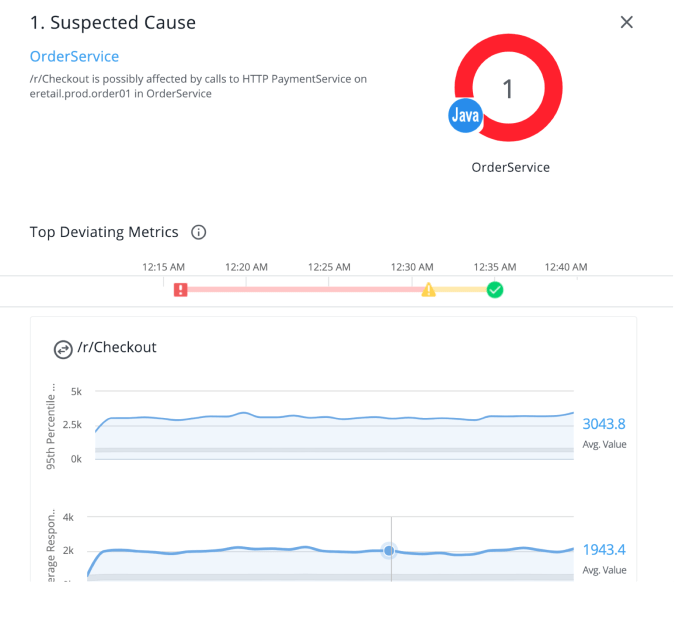

It’s at this point in the conversation that Monica and McCauley give me their off-the-record demo. While there are no screenshots to share, the enterprise security suite they’ve built has the polish of a consumer app like Robinhood. What I can say is that Anchorage works with clients to whitelist employees’ devices. It then uses multiple types of biometric signals and behavioral analytics about the person and device trying to log in to verify their identity.

But even once they have access, Anchorage is built around quorum-based approvals. Withdrawals, other transactions, and even changes to employee permissions require approval from multiple users inside the client company. A client could set up Anchorage so it requires five of seven executives’ approval to pull out assets. And finally, outlier detection algorithms and a human reviewer check the transaction to make sure it looks legit. A hacker or rogue employee can’t steal the funds even if they’re logged in, since they need a consensus of approvals.
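To make the five-of-seven idea concrete, here is a minimal sketch of a quorum check in Python. It is not Anchorage’s implementation, which the company keeps off the record; the names `QuorumPolicy`, `PendingTransaction`, `approve`, and `is_authorized` are hypothetical, and the biometric, behavioral, and human-review steps are left out entirely.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class QuorumPolicy:
    """Hypothetical policy: who may approve, and how many approvals are needed."""
    approvers: frozenset
    threshold: int


@dataclass
class PendingTransaction:
    """Hypothetical transaction that waits until enough approvers sign off."""
    description: str
    policy: QuorumPolicy
    approvals: set = field(default_factory=set)

    def approve(self, user: str) -> None:
        # Only people named in the policy may approve.
        if user not in self.policy.approvers:
            raise PermissionError(f"{user} is not an authorized approver")
        # A set ignores repeat approvals from the same person.
        self.approvals.add(user)

    def is_authorized(self) -> bool:
        # The transaction clears only once the quorum is reached.
        return len(self.approvals) >= self.policy.threshold


executives = frozenset({"ana", "ben", "cho", "dev", "eli", "fay", "gus"})
policy = QuorumPolicy(approvers=executives, threshold=5)
withdrawal = PendingTransaction("withdraw assets", policy)

for name in ["ana", "ben", "cho", "dev"]:
    withdrawal.approve(name)

print(withdrawal.is_authorized())  # four of seven is not enough yet
```

In this sketch a single compromised login gets an attacker one approval at most, which is the property the founders describe: no one person can move funds alone.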

That kind of assurance means institutional investors can confidently start to invest in crypto assets. That swell of capital could help replace the retreating consumer investors who’ve fled the market this year leading to massive price drops. The liquidity provided by these asset managers could keep the whole blockchain industry moving. “Institutional investing has had centuries to build up a set of market infrastructure. Custody was something that for other asset classes was solved hundreds of years ago so it’s just now catching up [for crypto]” says McCauley. “We’re creating a bigger market in and of itself” Monica adds.

With Anchorage steadfastly handling custody, the risk these co-founders admit worries them lies in the smart contracts that govern the cryptocurrencies themselves. “We need to be extremely wide in our level of support and extremely deep because each blockchain has details of implementation. This is inherently a very difficult problem” McCauley explains. It doesn’t matter if the coins are safe in Anchorage’s custody if a janky smart contract can botch their transfer.

There are plenty of startups vying to offer crypto custody, ranging from Bitgo and Ledger to well-known names like Coinbase and Gemini. Yet Anchorage offers a rare combination of institutional-since-day-one security rigor with the ability to participate in votes and governance of crypto assets that’s impossible if they’re in cold storage. Down the line, Anchorage hints that it might serve clients recommendations for how to vote to maximize their yield and preserve the sanctity of their coin.

They’ll have crypto investment legend Chris Dixon on their board to guide them. “What you’ll see is in the same way that institutional investors want to buy stock in Facebook and Google and Netflix, they’ll want to buy the equivalent in the world 10 years from now and do that safely” Dixon tells me. “Anchorage will be that layer for them.”

But why do the Anchorage founders care so much about the problem? McCauley concludes that “When we look at what’s potentially possible with crypto, there’s a fundamentally more accessible economy. We view ourselves as a key component of bringing that future forward.”

