Companies are on the hunt for ways to reduce the time and money it costs their employees to perform repetitive tasks, so today a startup that has built a business to capitalize on this is announcing a huge round of funding to double down on the opportunity.

UiPath — a robotic process automation startup originally founded in Romania that uses artificial intelligence and sophisticated scripts to build software to run these tasks — today confirmed that it has closed a Series D round of $568 million at a post-money valuation of $7 billion.

From what we understand, the startup is “close to profitability” and is going to keep growing as a private company. An IPO within the next 12-24 months is the “medium term” plan.

“We are at the tipping point. Business leaders everywhere are augmenting their workforces with software robots, rapidly accelerating the digital transformation of their entire business and freeing employees to spend time on more impactful work,” said Daniel Dines, UiPath co-founder and CEO, in a statement. “UiPath is leading this workforce revolution, driven by our core determination to democratize RPA and deliver on our vision of a robot helping every person.”

This latest round of funding is being led by Coatue, with participation from Dragoneer, Wellington, Sands Capital, and funds and accounts advised by T. Rowe Price Associates, Accel, Alphabet’s CapitalG, Sequoia, IVP and Madrona Venture Group.

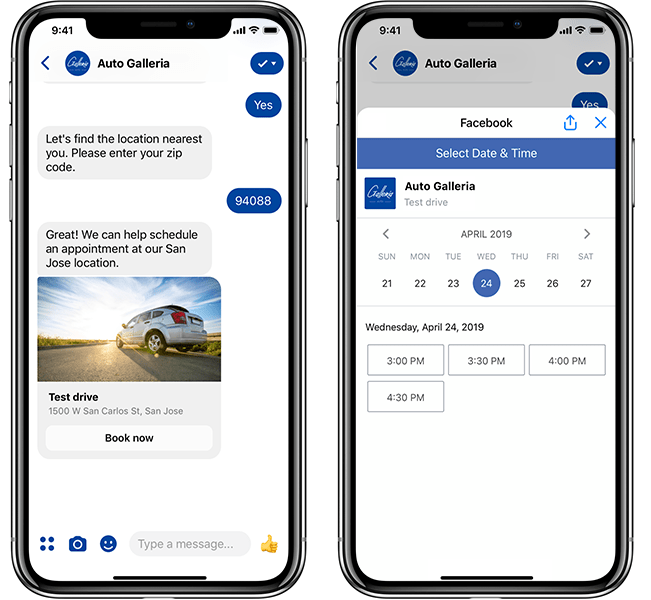

CFO Marie Myers said in an interview in London that the plan will be to use this funding to expand UiPath’s focus into more front-office and customer-facing areas, such as customer support and sales.

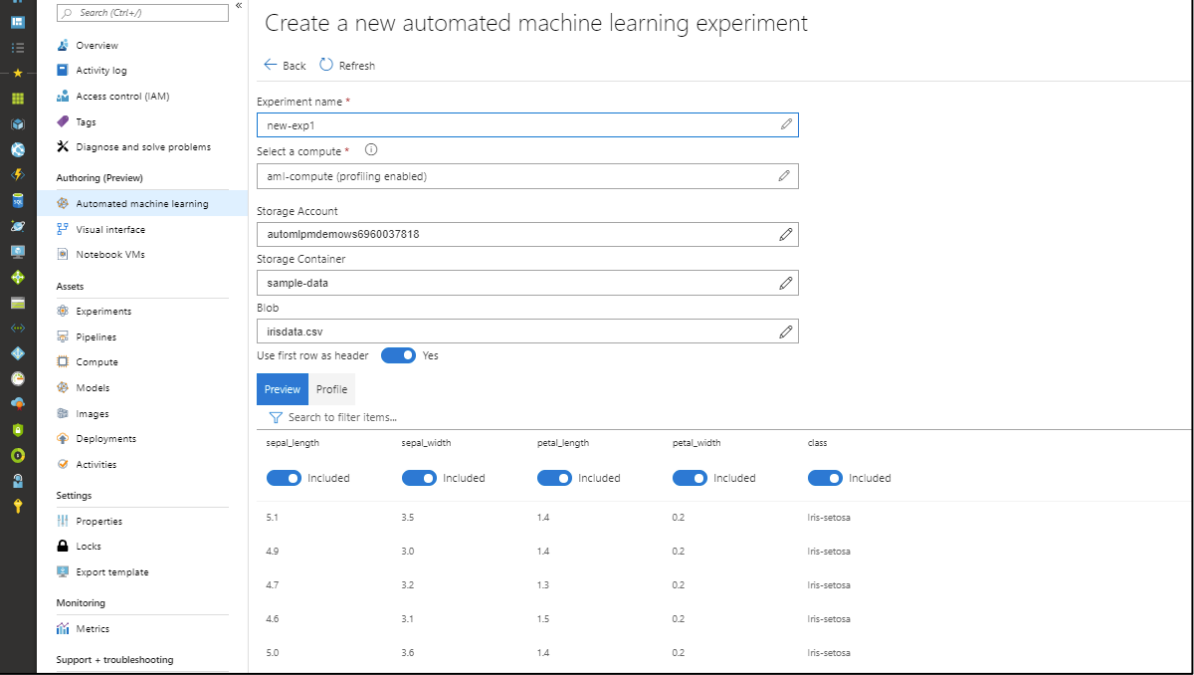

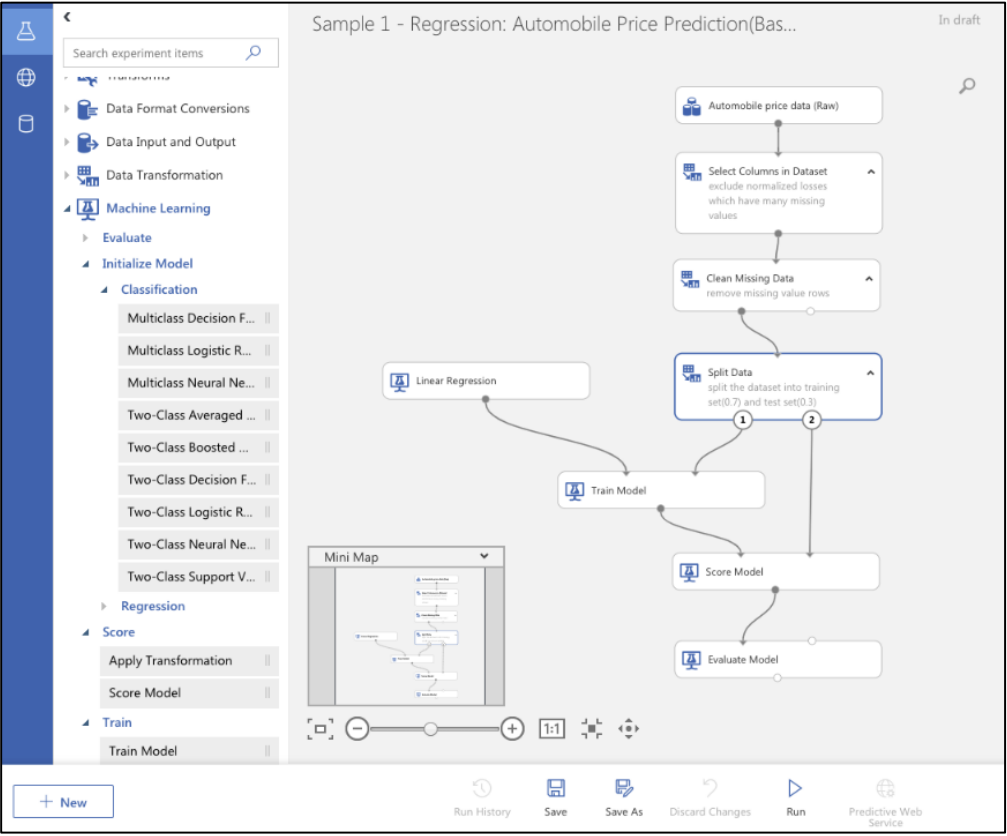

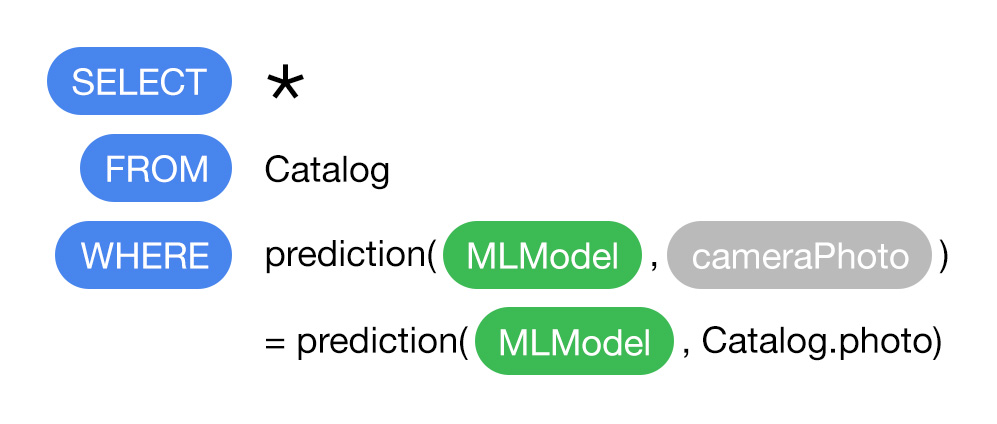

“We want to move into automation into new levels,” she said. “We’re advancing quickly into AI and the cloud, with plans to launch a new AI product in the second half of the year that we believe will demystify it for our users.” The product, she added, will be focused around “drag and drop” architecture and will work both for attended and unattended bots — that is, those that work as assistants to humans, and those that work completely on their own. “Robotics has moved out of the back office and into the front office, and the time is right to move into intelligent automation.”

Today’s news confirms Kate’s report from last month noting that the round was in progress: in the end, the amount UiPath raised was higher than the target amount we’d heard ($400 million), with the valuation on the more “conservative” side (we’d said the valuation would be higher than $7 billion).

“Conservative” is a relative term here. The company has been on a funding tear in the last year, raising $418 million ($153 million at Series B and $265 million at Series C) in the space of 12 months, and seeing its valuation go from a modest $110 million in April 2017 to $7 billion today, just two years later.

Up to now, UiPath has focused on internal and back-office tasks in areas like accounting, human resources paperwork, and claims processing — a booming business that has seen UiPath expand its annual run rate to more than $200 million (versus $150 million six months ago) and its customer base to more than 400,000 people.

Customers today include American Fidelity, BankUnited, CWT (formerly known as Carlson Wagonlit Travel), Duracell, Google, Japan Exchange Group (JPX), LogMeIn, McDonald’s, NHS Shared Business Services, Nippon Life Insurance Company, NTT Communications, Orange, Ricoh Company, Ltd., Rogers Communications, Shinsei Bank, Quest Diagnostics, Uber, the US Navy, Voya Financial, Virgin Media, and World Fuel Services.

Moving into more front-office tasks is an ambitious but not surprising leap for UiPath: looking at that customer list, it’s notable that many of these organizations have customer-facing operations, often with their own sets of repetitive processes that are ripe for improving by tapping into the many facets of AI — from computer vision to natural language processing and voice recognition, through to machine learning — alongside other technology.

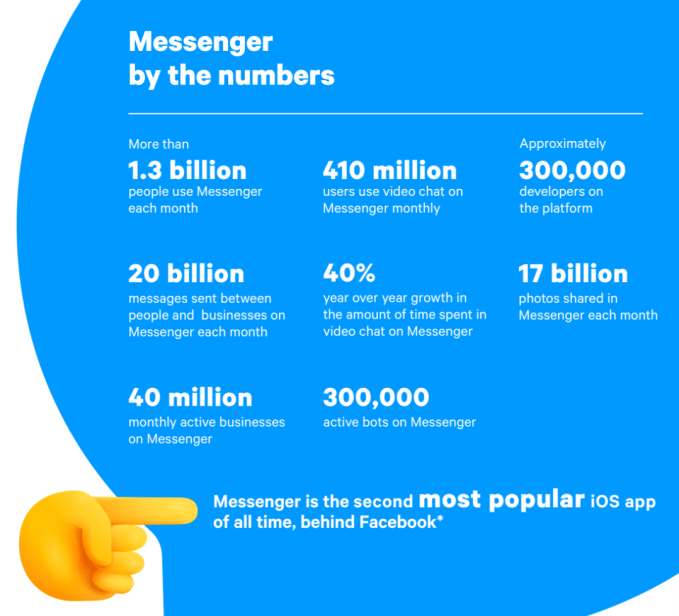

It also raises the question of what UiPath might look to tackle next. Having customer-facing tools and services is one short leap from building consumer services, an area where the likes of Amazon, Google, Apple and Microsoft are all pushing hard with devices and personal assistant services. (That would indeed open up the competitive landscape quite a lot for UiPath, beyond RPA companies like Automation Anywhere, Kofax and Blue Prism that are its competitors today.)

Robots have been given a somewhat bad rap in the world of work: critics worry that they are “taking over all the jobs”, removing humans and their own need to be industrious from the equation; and in the worst-case scenarios, the work of a robot lacks the nuance and sophistication you get from the human touch.

UiPath and the bigger area of RPA are interesting in this regard: the aim (the stated aim, at least) isn’t to replace people, but to take tasks out of their hands to make it easier for them to focus on the non-repetitive work that “robots” — and in the case of UiPath, software scripts and robots — cannot do.

Indeed, that “future of work” angle is precisely what has attracted investors.

“UiPath is enabling the critical capabilities necessary to advance how companies perform and how employees better spend their time,” said Greg Dunham, vice president at T. Rowe Price Associates, Inc., in a statement. “The industry has achieved rapid growth in such a short time, with UiPath at the head of it, largely due to the fact that RPA is becoming recognized as the paradigm shift needed to drive digital transformation through virtually every single industry in the world.”

As we’ve written before, the company has been a big hit with investors because of the rapid traction it has seen with enterprise customers.

There is an interesting side story to the funding that speaks to that traction: Myers, the CFO, came to UiPath by way of one of those engagements. She had been a senior finance executive with HP tasked with figuring out how to make some of its accounting more efficient. She issued an RFP for the work, and the only company she thought really addressed the task with a truly tech-first solution, at a very competitive price, was an unlikely startup out of Romania, which turned out to be UiPath. She became one of the company’s first customers, and eventually Dines offered her a job to help build his company to the next level, which she leaped to take.

“UiPath is improving business performance, efficiency and operation in a way we’ve never seen before,” said Philippe Laffont, founder of Coatue Management, in a statement. “The Company’s rapid growth over the last two years is a testament to the fact that UiPath is transforming how companies manage their resources. RPA presents an enormous opportunity for companies around the world who are embracing artificial intelligence, driving a new era of productivity, efficiency and workplace satisfaction.”