Istio, the service mesh for microservices from Google, IBM, Lyft, Red Hat and many other players in the open source community, launched version 1.0 of its tools today.

If you’re not into service meshes, that’s understandable. Few people are. But Istio is probably one of the most important new open source projects out there right now. It sits at the intersection of a number of industry trends like containers, microservices and serverless computing and makes it easier for enterprises to embrace them. Istio now has over 200 contributors and the code has seen over 4,000 check-ins since the launch of version 0.1.

Istio, at its core, handles the routing, load balancing, flow control and security needs of microservices. It sits on top of existing distributed applications and basically helps them talk to each other securely, while also providing logging, telemetry and the necessary policies that keep things under control (and secure). It also features support for canary releases, which allow developers to test updates with a few users before launching them to a wider audience, something that Google and other webscale companies have long done internally.
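To make the canary idea concrete, here is a minimal sketch of weighted traffic splitting, the basic mechanism behind sending a small share of requests to a new version. This is not Istio's API or configuration format. The names `pick_version`, `simulate` and `canary_weights`, and the 95/5 split, are hypothetical and chosen purely for illustration.

```python
import random


def pick_version(weights, rng):
    """Pick one service version at random, in proportion to its weight.

    `weights` maps a version name to its share of traffic (hypothetical values).
    """
    versions = list(weights)
    return rng.choices(versions, weights=[weights[v] for v in versions], k=1)[0]


def simulate(weights, requests=10_000, seed=0):
    """Route `requests` simulated requests and count how many each version gets."""
    rng = random.Random(seed)  # seeded so the sketch is repeatable
    counts = {version: 0 for version in weights}
    for _ in range(requests):
        counts[pick_version(weights, rng)] += 1
    return counts


# Assumed split: 95% of traffic stays on the stable version, 5% goes to the canary.
canary_weights = {"v1": 95, "v2": 5}
counts = simulate(canary_weights)
print(counts)
```

In a real mesh, the split is declared as routing policy and enforced by the proxies rather than by application code; the sketch only shows why a small weight exposes an update to a small fraction of users.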

“In the area of microservices, things are moving so quickly,” Google product manager Jennifer Lin told me. “And with the success of Kubernetes and the abstraction around container orchestration, Istio was formed as an open source project to really take the next step in terms of a substrate for microservice development as well as a path for VM-based workloads to move into more of a service management layer. So it’s really focused around the right level of abstractions for services and creating a consistent environment for managing that.”

Even before the 1.0 release, a number of companies had already adopted Istio in production, including the likes of eBay and Auto Trader UK. Lin argues that this is a sign that Istio solves a problem that a lot of businesses are facing today as they adopt microservices. “A number of more sophisticated customers tried to build their own service management layer and while we hadn’t yet declared 1.0, we had a number of customers, including a surprising number of large enterprise customers, say, ‘you know, even though you’re not 1.0, I’m very comfortable putting this in production because what I’m comparing it to is much more raw.’”

IBM Fellow and VP of Cloud Jason McGee agrees with this and notes that “our mission since Istio’s launch has been to enable everyone to succeed with microservices, especially in the enterprise. This is why we’ve focused the community around improving security and scale, and heavily leaned our contributions on what we’ve learned from building agile cloud architectures for companies of all sizes.”

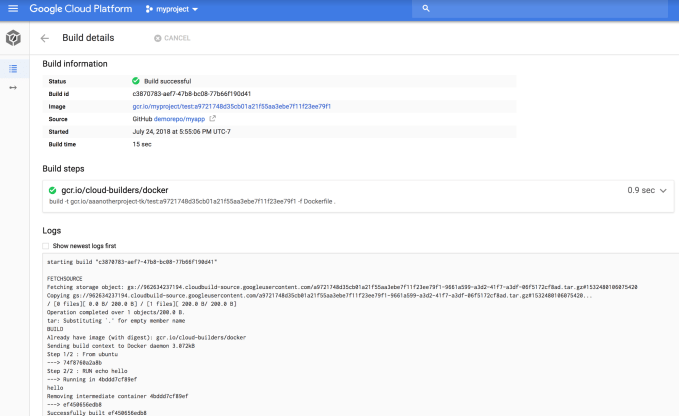

A lot of the large cloud players now support Istio directly, too. IBM supports it on top of its Kubernetes Service, for example, and Google even announced a managed Istio service for its Google Cloud users, as well as some additional open source tooling for serverless applications built on top of Kubernetes and Istio.

Two names missing from today’s party are Microsoft and Amazon. I think that’ll change over time, though, assuming the project keeps its momentum.

Istio also isn’t part of any major open source foundation yet. The Cloud Native Computing Foundation (CNCF), the home of Kubernetes, is backing linkerd, a project that isn’t all that dissimilar from Istio. Once a 1.0 release of these kinds of projects rolls around, the maintainers often start looking for a foundation that can shepherd the development of the project over time. I’m guessing it’s only a matter of time before we hear more about where Istio will land.

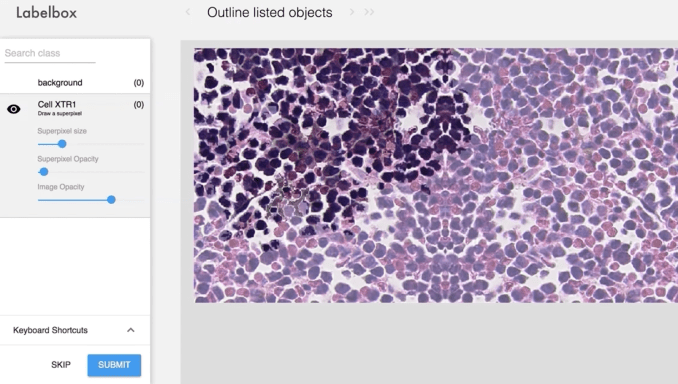

The other major new service Google is launching is Managed Istio (together with Apigee API Management for Istio) to help businesses manage and secure their microservices.
